Ying Wang

I am a PhD student in the CILVR Lab at NYU Center for Data Science, working with Prof. Mengye Ren and Prof. Yann LeCun. I’m interested in reasoning, planning, and multimodal learning.

Prior to starting my PhD, I earned an MS in Data Science, also at NYU, and a BS in Computer Science, Statistics, and Finance at McGill University.

yw3076 [at] nyu [dot] edu | Google Scholar | X(Twitter) | LinkedIn

News

| Sep 1, 2024 | I started my PhD at NYU 💜💜💜 |

|---|---|

| Jun 13, 2024 | Our team has won the third place in the Ego4D EgoSchema challenge. |

| Jun 3, 2024 | Started my summer internship as a research scientist at Meta (Video recommendation), Bellevue. |

| Oct 20, 2023 | Received Travel Award for NeurIPS 2023! See you in New Orleans ⚜️ |

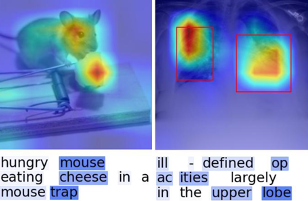

| Sep 21, 2023 | Our work, Visual Explanations of Image-Text Representations via Multi-Modal Information Bottleneck Attribution, is accepted by NeurIPS 2023. |